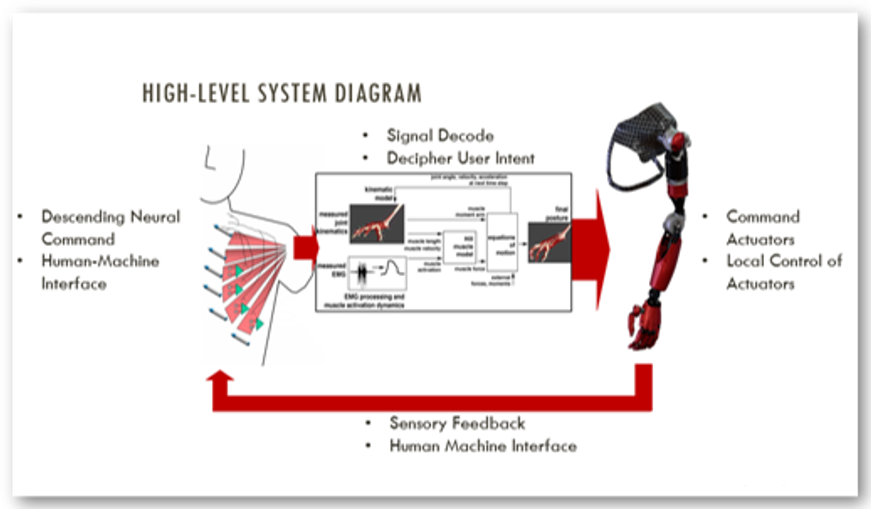

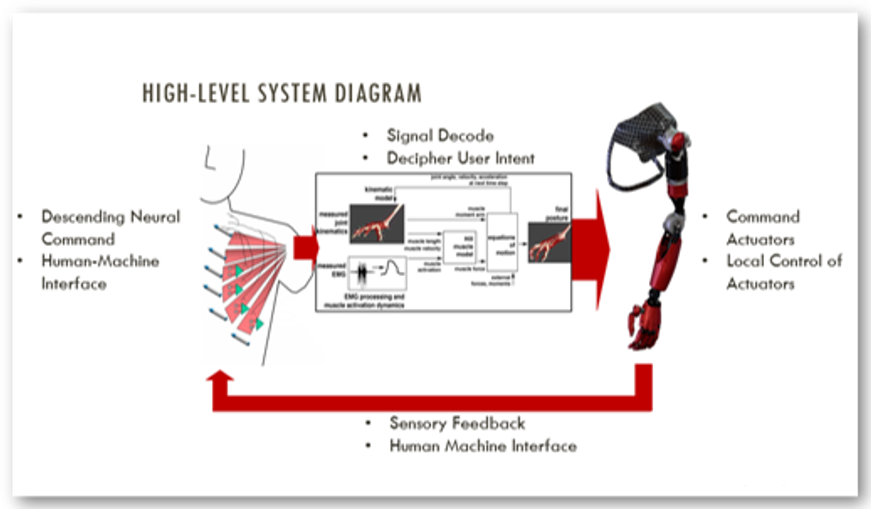

We record electromyogram (EMG) signals from the body, feed them into a microprocessor, decide on a motion, and then drive the motors in a prosthetic hand. The high-level system diagram in Figure 1 shows how these steps fit together when a prosthetic device operates properly.

Figure 1: A high-level system diagram showing the four main parts of operating a myoelectric prosthetic hand (Human Interfacing > Signal Decoding > Actuating > Sensory Feedback).

As computational power has increased, many algorithms have been developed that handle complex calculations and still produce answers rapidly. However, controlling a prosthetic hand remains one of the most difficult challenges in the field. Making a hand open and close may be easy, but producing independent and simultaneous finger motions is extremely difficult, even with the most advanced algorithms.

We struggle to control a complex mechanical hand because we are working with a limited number of EMG signal sites. Normally, fine motor skills are controlled by over 30 muscles!¹ We currently do not have access to that many independent control sites, so we have to be creative in deciphering the user's intent from the signals that are available. Unlike directly programming a robotic hand, we have to guess what the human wants to do. This is a challenge because the algorithm must guess quickly and accurately every 100 milliseconds; otherwise the user will become frustrated by the lag in response time² (similar to experiencing lag in a video game).
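To make the 100-millisecond decision cycle concrete, here is a minimal sketch of one way such a loop could look. It is an illustration under stated assumptions, not the method used in this work: the 1000 Hz sampling rate, the mean-absolute-value feature, the nearest-centroid classifier, the two-channel setup, and the centroid values are all hypothetical choices made for the example.

```python
from math import dist

SAMPLE_RATE_HZ = 1000  # assumed sampling rate (not stated in the text)
WINDOW_MS = 100        # decision interval mentioned in the text


def window_samples(sample_rate_hz=SAMPLE_RATE_HZ, window_ms=WINDOW_MS):
    """Number of samples that make up one decision window."""
    return sample_rate_hz * window_ms // 1000


def mean_absolute_value(channel):
    """Mean absolute value of one channel, a simple amplitude feature."""
    return sum(abs(x) for x in channel) / len(channel)


def extract_features(window):
    """window: list of channels, each a list of samples."""
    return [mean_absolute_value(ch) for ch in window]


def classify(features, centroids):
    """Nearest-centroid rule: pick the label whose centroid is closest."""
    return min(centroids, key=lambda label: dist(features, centroids[label]))


def decode_stream(channels, centroids, n):
    """Yield one motion label per non-overlapping window of n samples."""
    total = min(len(ch) for ch in channels)
    for start in range(0, total - n + 1, n):
        window = [ch[start:start + n] for ch in channels]
        yield classify(extract_features(window), centroids)


# Hypothetical per-motion feature centroids for two EMG sites.
centroids = {"rest": [0.05, 0.05], "open": [0.8, 0.1], "close": [0.1, 0.8]}

# Synthetic two-channel signal: 100 ms of rest, then open, then close.
ch_a = [0.02, -0.02] * 50 + [0.9, -0.9] * 50 + [0.1, -0.1] * 50
ch_b = [0.02, -0.02] * 50 + [0.1, -0.1] * 50 + [0.7, -0.7] * 50

labels = list(decode_stream([ch_a, ch_b], centroids, window_samples()))
print(labels)
```

With only two control sites, each motion has to be inferred from the pattern of activity across the channels rather than from a dedicated muscle signal, which is why the decoding step is a guess rather than a direct command.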

User acceptance is therefore one of the main problems in the prosthetics field. If the device does not function as much like a real hand as possible, the likelihood that it will be rejected increases significantly. We are constantly looking for ways to expand the capabilities of prosthetic hands, using the available EMG signals and pattern recognition techniques to give mobility back to users.
